Microsoft recently announced HDInsight, built on top of the HortonWorks Data Platform (a Hadoop distribution similar to Cloudera’s). A developer preview of HDInsight is available as a free download from Microsoft and is very easy to set up. However, the developer preview is a single-box solution, so it cannot simulate a real-world deployment with a multi-node cluster. You can create a 4-node Hadoop cluster on Azure with a few clicks, but it is prohibitively costly (and the cluster will be shut down once the free-tier usage runs out, if your account is a trial one). This is a step-by-step guide to setting up a multi-node cluster for free on your laptop using the HortonWorks Data Platform. Because HDInsight is built on top of the HortonWorks solution, there won’t be many differences apart from some usability features and nicer-looking clients. So let’s get started with the setup:

What will we build?

We will build a VirtualBox base VM with all the necessary software installed, sysprep it, and spin up linked clones from the base to make better use of available resources. We will then build a domain controller and add 4 VMs to the domain. You can achieve a similar setup with Hyper-V, using differencing disks. We should end up with something like this:

Domain: TESLA.COM

| Name of the VM | Description | IP Address | RAM (MB) |
|---|---|---|---|
| HDDC | Domain Controller and Database host for Metastore | 192.168.80.1 | 1024 |
| HDMaster | Name node, Secondary Name node, Hive, Oozie, Templeton | 192.168.80.2 | 2048 |
| HDSlave01 | Slave/Data Node | 192.168.80.3 | 2048 |
| HDSlave02 | Slave/Data Node | 192.168.80.4 | 2048 |
| HDSlave03 | Slave/Data Node | 192.168.80.5 | 1024 |

In production environments, Hive, Oozie and Templeton usually run on separate servers, but for the sake of simplicity I chose to run all of them on a single VM. If you don’t have enough resources to run 3 data/slave nodes, you can go with 2. You need at least 1 master and 2 slave nodes to get a feel for a real Hadoop cluster.

System Requirements:

You need a decent laptop/desktop with more than 12 GB of RAM and a decent processor such as a Core i5. If you have less RAM, you might want to run a bare-minimum 3-node cluster (1 master and 2 slaves) and adjust the RAM assignments accordingly. For this demo, see how I assigned RAM in the table above. You can get away with assigning less memory to the data nodes.
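
To see how much of the host's memory the VMs claim, the RAM column of the table can be added up. This is only a small sketch: the figures are copied from the table, and the "minimal" plan simply drops HDSlave03, which is my reading of the 1 master plus 2 slaves option rather than something measured.

```python
# RAM (in MB) per VM, copied from the table in "What will we build?"
VM_RAM_MB = {
    "HDDC": 1024,
    "HDMaster": 2048,
    "HDSlave01": 2048,
    "HDSlave02": 2048,
    "HDSlave03": 1024,
}


def total_ram_mb(plan):
    """Sum the RAM assigned to every VM in the plan."""
    return sum(plan.values())


def minimal_plan(plan):
    """Assumed minimal layout: same VMs without the third slave node."""
    return {name: ram for name, ram in plan.items() if name != "HDSlave03"}


full_total = total_ram_mb(VM_RAM_MB)
minimal_total = total_ram_mb(minimal_plan(VM_RAM_MB))
print("Full cluster:    %d MB" % full_total)
print("Minimal cluster: %d MB" % minimal_total)
```

The full layout asks for 8 GB in total, which is why a host with more than 12 GB leaves comfortable headroom for the host OS and VirtualBox itself.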

Software Requirements:

| Windows Server 2008 R2 or Windows Server 2012 Evaluation edition, 64 bit |

| Microsoft .NET Framework 4.0 |

| Microsoft Visual C++ 2010 redistributable, 64 bit |

| Java JDK 6u31 or higher |

| Python 2.7 |

| Microsoft SQL Server 2008 R2 (if you would like to use it for Metastore) |

| Microsoft SQL Server JDBC Driver |

| VirtualBox for virtualization (Or HyperV, if you prefer) |

| HortonWorks DataPlatform for Windows |

Building a Base VM:

This guide assumes that you are using VirtualBox for virtualization. The steps are similar with Hyper-V, with minor differences such as creating differencing disks before creating VM clones from them. Hadoop cluster setup involves installing a bunch of software and setting environment variables on each server. Creating a base VM and spinning up linked clones from it makes this remarkably easier and saves a lot of time. Compared to standalone VMs, linked clones also let you fit more VMs into your available disk space.

1. Download and Install VirtualBox

2. Create a new VM to serve as the base VM. I called mine MOTHERSHIP. Select 25 GB of initial disk space, allowing dynamic expansion. We will do a lot of installations on this machine, so to make them run quickly, temporarily assign as much RAM as you can spare. At the end of the setup, we can reduce the RAM to a lower value.

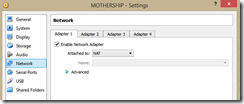

3. Enable two network adapters on your VM: Adapter 1 attached to NAT (if you care about internet access on the VMs) and Adapter 2 attached to an Internal Network for the domain.

4. From Settings > Storage > Controller: IDE, mount the Windows Server 2008 R2 installation media ISO.

5. Start the VM and boot from the ISO. Perform the Windows installation. This step is straightforward.

6. Once the installation is done, set the Administrator password to P@$$w0rd! and log in.

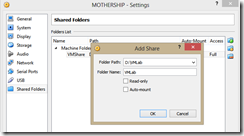

7. Install the VirtualBox Guest Additions for better graphics, shared folders, etc.

8. You will move a lot of files from your host machine to the VM, so configuring a shared folder will help.

Install necessary software on BaseVM:

1. Login to the base VM you built above and install Microsoft .NET Framework 4.0

2. Install the Microsoft Visual C++ 2010 redistributable package (64 bit).

3. Install Java JDK 6u31 or higher. Make sure that the destination directory you select during installation has no spaces in its path. For example, I installed mine in C:\Java\jdk1.6.0_31

4. Add an environment variable for Java. If you installed Java in C:\Java\jdk1.6.0_31, add that path as a new system variable. Open a PowerShell window as an administrator and execute the command below:

[Environment]::SetEnvironmentVariable("JAVA_HOME","c:\java\jdk1.6.0_31","Machine")

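5. Install Python and add it to the system Path. Appending to $env:Path only changes the current PowerShell session, so set the machine-level variable instead. Run the command below in a PowerShell window launched as Administrator:

[Environment]::SetEnvironmentVariable("Path", [Environment]::GetEnvironmentVariable("Path","Machine") + ";C:\Python27", "Machine")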

6. Confirm that the environment variables have been set properly by running %JAVA_HOME% from Run; it should open the directory where the JDK was installed. Also run python from Run; it should open the Python shell.

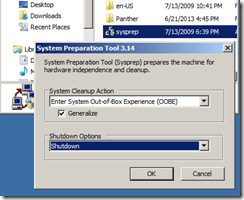

7. We are almost done with the base VM. We can now generalize this image using sysprep. Go to Run, type sysprep and press Enter. It should open the C:\Windows\System32\sysprep directory. Run sysprep.exe as an administrator. Select ‘Enter System Out-of-Box Experience (OOBE)’, check Generalize, and select Shutdown as the shutdown option.
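
The check from step 6 can also be scripted and run before the image is sysprepped, since every clone inherits whatever the base VM has. Below is a minimal sketch in Python (already installed on the base VM). The function name `java_home_problems` is my own, and the only rules it encodes are the ones stated above: the variable must be set, must not contain spaces, and must point to an existing directory.

```python
import os


def java_home_problems(java_home, path_exists=os.path.isdir):
    """Return a list of human-readable problems with a JAVA_HOME value.

    path_exists is injectable so the check can be exercised without a JDK.
    """
    problems = []
    if not java_home:
        problems.append("JAVA_HOME is not set")
        return problems
    if " " in java_home:
        problems.append("JAVA_HOME contains a space: %r" % java_home)
    if not path_exists(java_home):
        problems.append("JAVA_HOME does not point to an existing directory")
    return problems


if __name__ == "__main__":
    found = java_home_problems(os.environ.get("JAVA_HOME"))
    if found:
        for problem in found:
            print(problem)
    else:
        print("JAVA_HOME looks fine")
```

Run it from a new command prompt so that it sees the machine-level variables set in steps 4 and 5.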

Create Linked Clones:

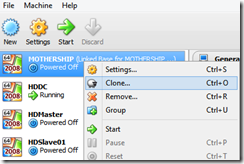

1. Go to VirtualBox, right-click the base VM you created above and select Clone.

2. Give the VM an appropriate name. In my case, it’s HDDC for the domain controller. Make sure you select Re-Initialize MAC Addresses of all network cards.

3. In the Clone Type dialog, select Linked Clone and click Clone.

4. Repeat steps #1 to 3 to create the other VMs that will be part of your cluster. You should end up with HDDC, HDMaster and the slave nodes HDSlave01, HDSlave02 and HDSlave03 (depending on your available resources).

5. Since we created these clones from the same base, they inherit all of its settings, including memory. You might want to go to the settings of each VM and assign memory as you see fit.

Configuring Domain on HDDC

We will not go into the details of setting up Active Directory. Google is your friend. But below are some steps specific to our setup.

1. Start HDDC VM and login.

2. Because the image was generalized with sysprep, Windows assigns a random computer name on first boot, even though your VM name is HDDC. Change the computer name to HDDC and reboot.

3. Go to the network settings and you should see two network adapters enabled. Usually the second adapter is your domain-only internal network. To confirm, you can go to the VM settings while the VM is running, open the Network tab and temporarily simulate a cable disconnection by unchecking Cable Connected. The adapter attached to NAT is for internet access and the one created as Internal is for the domain network.

4. Go to the properties of the domain network adapter inside the VM and set a static IP configuration: IP 192.168.80.1 (from the table above) and subnet mask 255.255.255.0, matching the settings used for the other VMs later on.

5. Enable the Active Directory Domain Services role and reboot. Use dcpromo.exe to create a new domain. I chose TESLA.COM as my domain name, after the greatest scientist who ever lived. If you need help configuring an AD domain, check this page. After configuration and reboot, log on to the domain controller as TESLA\Administrator, create an Active Directory account and make it a Domain Admin. This will make things easier for the rest of the setup.

6. Optionally, if you are planning to use this VM as the Metastore host for the Hadoop cluster, you can install SQL Server 2008 R2. We will not go into the details of that setup; it is a straightforward installation. Make sure you create a SQL login that has sysadmin privileges. My login is hadoop. I have also created a couple of blank databases on it, namely hivedb and ooziedb.

Network Configuration and Joining other VMs to Domain

1. Log on to HDMaster as an Administrator

2. Find the Domain network Adapter (refer to step #3 in Configuring Domain section). Make sure your HDDC (Domain controller) VM is running.

3. Change the adapter settings: IP – 192.168.80.2, subnet mask – 255.255.255.0, Default Gateway – leave it blank, Preferred DNS – 192.168.80.1

4. Right click on My Computer > Properties > Computer name, domain, and workgroup settings > Change settings. Change the computer name to HDMaster and enter the domain name TESLA.COM. Enter the Domain Admin login and password when prompted and you’ll be greeted with a welcome message. Reboot.

5. Log on to each of the remaining VMs and repeat steps #1 to 4; the only difference is the computer name and IP address. Refer to the list of IPs under the ‘What will we build?’ section of this article. Preferred DNS remains the same for all the VMs, i.e. 192.168.80.1
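
If you would rather not click through the adapter dialog on every VM, the same settings can be applied with `netsh interface ip`. The sketch below only prints the commands for each VM, using the addresses from the table. The adapter name "Local Area Connection 2" is an assumption; check the actual name of your domain adapter inside each VM before running anything.

```python
DOMAIN_DNS = "192.168.80.1"       # HDDC, the domain controller
SUBNET_MASK = "255.255.255.0"
# Assumption: name of the internal (domain) adapter inside the guest.
ADAPTER_NAME = "Local Area Connection 2"

# Host names and addresses from the table in "What will we build?"
VM_ADDRESSES = [
    ("HDMaster", "192.168.80.2"),
    ("HDSlave01", "192.168.80.3"),
    ("HDSlave02", "192.168.80.4"),
    ("HDSlave03", "192.168.80.5"),
]


def netsh_commands(ip, adapter=ADAPTER_NAME):
    """Build the two netsh commands for one VM: static IP (no gateway) and DNS."""
    return [
        'netsh interface ip set address name="%s" static %s %s'
        % (adapter, ip, SUBNET_MASK),
        'netsh interface ip set dns name="%s" static %s'
        % (adapter, DOMAIN_DNS),
    ]


for host, ip in VM_ADDRESSES:
    print("# %s" % host)
    for command in netsh_commands(ip):
        print(command)
```

Run the two printed commands for a given host in an elevated command prompt on that VM. No default gateway is passed, which matches the blank gateway in step 3.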

Preparing The environment for Hadoop Cluster

We now have a domain with all the VMs added to it. We also installed all the necessary software on the base VM, so all the VMs created from it inherit it. It is now time to prepare the environment for the Hadoop installation. Before we begin, make sure ALL VMs have the same date and time settings.

Disable IPv6 on ALL VMs:

Log on to each VM, open each network adapter and uncheck the IPv6 protocol in the adapter settings.

Adding firewall exceptions:

Hadoop uses multiple ports for communication between clients and services, so we need to create firewall exceptions on all the servers in the cluster. Execute the commands below on each VM of the cluster from a PowerShell prompt launched as Administrator. If you want to make it simpler, you can turn off the firewall altogether at the domain level for all VMs, but we don’t normally do that in production.

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=50470

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=50070

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=8020-9000

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=50075

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=50475

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=50010

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=50020

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=50030

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=8021

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=50060

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=51111

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=10000

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=9083

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=50111

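
Typing that many near-identical commands is error prone, so here is a hedged sketch that generates them from a list of ports. The port list is copied from the commands above. The rule name pattern `Hadoop-TCP-<port>` is my own choice (the commands above reuse a single name for every rule), picked so each rule is easy to find and delete later.

```python
# Ports taken from the netsh commands above; "8020-9000" is a port range.
HADOOP_PORTS = [
    "50470", "50070", "8020-9000", "50075", "50475", "50010", "50020",
    "50030", "8021", "50060", "51111", "10000", "9083", "50111",
]


def firewall_command(port):
    """Build one inbound TCP allow rule for a port or port range."""
    return (
        'netsh advfirewall firewall add rule name="Hadoop-TCP-%s" '
        "dir=in action=allow protocol=TCP localport=%s" % (port, port)
    )


def firewall_commands(ports=HADOOP_PORTS):
    """Build the full list of commands, one per port entry."""
    return [firewall_command(port) for port in ports]


for command in firewall_commands():
    print(command)
```

Redirect the output to a .cmd file, copy it to each VM and run it from an elevated prompt.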

Networking Configurations and Edit Group Policies:

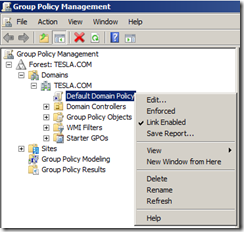

1. Log on to your domain controller VM, HDDC, with a Domain Administrator account (e.g. TESLA\admin).

2. Go to Start and type Group Policy Management in the search bar. Open Group Policy Management.

3. If you don’t see your forest TESLA.COM already, add a forest and provide your domain name.

4. Expand Forest –> Domains –> name of your domain –> right click on Default Domain Policy –> Edit.

5. The Group Policy Management Editor (let’s call it the GPM Editor) will open up. Note that ALL the steps below are done through this editor.

6. In the GPM Editor, navigate to Computer Configuration -> Policies -> Windows Settings -> Security Settings -> System Services -> Windows Remote Management (WS-Management). Change the startup mode to Automatic.

7. Go to Computer Configuration -> Policies -> Windows Settings -> Security Settings -> Windows Firewall with Advanced Security. Right click on it and create a new inbound firewall rule. For Rule Type, choose Predefined and select Windows Remote Management from the dropdown. It will automatically create 2 rules; let them be. Click Next and check Allow the connection.

8. This step enables the remote execution policy for PowerShell. Go to Computer Configuration -> Policies -> Administrative Templates -> Windows Components -> Windows PowerShell. Enable Turn on Script Execution. You’ll see an Execution Policy dropdown under Options; select Allow all scripts.

9. Go to Computer Configuration -> Policies -> Administrative Templates -> Windows Components -> Windows Remote Management (WinRM) -> WinRM Service. Enable “Allow Automatic Configuration of Listeners”. Under Options, enter * (asterisk symbol) for the IPv4 filter. Also change “Allow CredSSP Authentication” to Enabled.

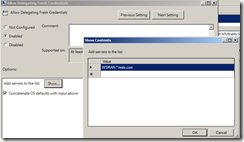

10. So far we have configured the WinRM Service; now it’s the WinRM Client’s turn. Go to Computer Configuration -> Policies -> Administrative Templates -> Windows Components -> Windows Remote Management (WinRM) -> WinRM Client. Set Trusted Hosts to Enabled and under Options, set TrustedHostsList to * (asterisk symbol). In the same folder, look for Allow CredSSP Authentication and set it to Enabled.

11. Go to Computer Configuration -> Policies -> Administrative Templates -> System -> Credentials Delegation. Set Allow Delegating Fresh Credentials to Enabled. Under Options, click Show next to Add Servers To The List and add a new WSMAN entry for your domain, for example WSMAN/*.TESLA.COM (replace TESLA.COM with your own domain).

12. Repeat the instructions in step #11 above, this time for the policy Allow Delegating Fresh Credentials with NTLM-only Server Authentication.

13. Already tired? We are almost done. Just a couple more steps before we launch the installation. Go to Run, type ADSIEdit.msc and press Enter. Expand the OU=Domain Controllers menu item and select CN=HDDC (the controller hostname). Go to Properties -> Security -> Advanced –> Add. Enter NETWORK SERVICE, click Check Names, then OK. In the Permission Entry dialog, select Validated write to service principal name. Click Allow and OK to save your changes.

14. On the domain controller VM, launch a PowerShell window as an administrator and run the following: Restart-Service WinRM

15. Force a Group Policy update on the other VMs in the environment. Run this command on each of them: gpupdate /force
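
Once the policies have been applied, a quick way to see whether the WinRM listener is reachable on each node is to try a TCP connection to it. The sketch below assumes the default WinRM HTTP port, 5985, and uses the host names from this guide. It only tests that the port accepts connections, not that authentication or CredSSP delegation works.

```python
import socket

WINRM_HTTP_PORT = 5985  # default WinRM HTTP listener port

# Host names from this guide; adjust to your own domain.
CLUSTER_HOSTS = [
    "HDMaster.tesla.com",
    "HDSlave01.tesla.com",
    "HDSlave02.tesla.com",
    "HDSlave03.tesla.com",
]


def port_is_open(host, port=WINRM_HTTP_PORT, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        conn = socket.create_connection((host, port), timeout)
    except (socket.error, socket.timeout):
        return False
    conn.close()
    return True


def check_hosts(hosts=CLUSTER_HOSTS, port=WINRM_HTTP_PORT):
    """Map each host name to True/False depending on whether the port answers."""
    return dict((host, port_is_open(host, port)) for host in hosts)
```

Calling `check_hosts()` from a Python shell on HDMaster returns a dictionary of host names and results. A `False` entry usually means the firewall rule or the policy has not reached that VM yet, so rerun gpupdate /force there and try again.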

Define Cluster Configuration File

When you download HortonWorks Dataplatform for windows, the zip contains a sample clusterproperties.txt file which can be used as a template during installation. The installation msi depends on clusterproperties file for cluster layout definition. Below is my Cluster Configuration file. Make changes to yours accordingly.

    #Log directory
    HDP_LOG_DIR=c:\hadoop\logs

    #Data directory
    HDP_DATA_DIR=c:\hdp\data

    #Hosts
    NAMENODE_HOST=HDMaster.tesla.com
    SECONDARY_NAMENODE_HOST=HDMaster.tesla.com
    JOBTRACKER_HOST=HDMaster.tesla.com
    HIVE_SERVER_HOST=HDMaster.tesla.com
    OOZIE_SERVER_HOST=HDMaster.tesla.com
    TEMPLETON_HOST=HDMaster.tesla.com
    SLAVE_HOSTS=HDSlave01.tesla.com, HDSlave02.tesla.com

    #Database host
    DB_FLAVOR=mssql
    DB_HOSTNAME=HDDC.tesla.com

    #Hive properties
    HIVE_DB_NAME=hivedb
    HIVE_DB_USERNAME=sa
    HIVE_DB_PASSWORD=P@$$word!

    #Oozie properties
    OOZIE_DB_NAME=ooziedb
    OOZIE_DB_USERNAME=sa
    OOZIE_DB_PASSWORD=P@$$word!
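
Since the installer depends entirely on this file, it is worth a sanity check before copying it to every node. The sketch below parses `KEY=VALUE` lines, skips `#` comments, and reports missing keys. `REQUIRED_KEYS` is just the set of non-credential keys from my file above, not an official list from HortonWorks, and the sample text is an excerpt of the same file.

```python
# Assumption: keys treated as required are simply those used in this guide.
REQUIRED_KEYS = [
    "HDP_LOG_DIR", "HDP_DATA_DIR", "NAMENODE_HOST", "SECONDARY_NAMENODE_HOST",
    "JOBTRACKER_HOST", "HIVE_SERVER_HOST", "OOZIE_SERVER_HOST",
    "TEMPLETON_HOST", "SLAVE_HOSTS", "DB_FLAVOR", "DB_HOSTNAME",
]

SAMPLE = r"""
#Log directory
HDP_LOG_DIR=c:\hadoop\logs
#Data directory
HDP_DATA_DIR=c:\hdp\data
#Hosts
NAMENODE_HOST=HDMaster.tesla.com
SECONDARY_NAMENODE_HOST=HDMaster.tesla.com
JOBTRACKER_HOST=HDMaster.tesla.com
HIVE_SERVER_HOST=HDMaster.tesla.com
OOZIE_SERVER_HOST=HDMaster.tesla.com
TEMPLETON_HOST=HDMaster.tesla.com
SLAVE_HOSTS=HDSlave01.tesla.com, HDSlave02.tesla.com
#Database host
DB_FLAVOR=mssql
DB_HOSTNAME=HDDC.tesla.com
"""


def parse_properties(text):
    """Parse KEY=VALUE lines into a dict, ignoring blanks and # comments."""
    props = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, separator, value = line.partition("=")
        if separator:
            props[key.strip()] = value.strip()
    return props


def missing_keys(props):
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not props.get(key)]


def slave_hosts(props):
    """Split SLAVE_HOSTS on commas and trim whitespace around each name."""
    return [h.strip() for h in props.get("SLAVE_HOSTS", "").split(",") if h.strip()]


props = parse_properties(SAMPLE)
print("Missing keys: %s" % missing_keys(props))
print("Slave nodes:  %s" % slave_hosts(props))
```

To check your real file, read it with `open(path).read()` and pass the text to `parse_properties` instead of `SAMPLE`.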

Installation

1. Keep all of your VMs running and log on to HDMaster with a domain admin account.

2. Create a folder C:\setup and copy into it the contents of the HortonWorks Data Platform installation media you downloaded at the beginning of this guide.

3. Replace the clusterproperties.txt with the one you created under the Define Cluster Configuration File section. This file is the same for ALL the participating servers in the cluster.

4. Open a command prompt as administrator and cd to C:\setup.

5. Type the command below to kick off the installation. Beware of unnecessary spaces in the command: there must be no space between a property name such as HDP_LAYOUT and the ‘=’ that follows it. It took me a lot of time to figure out that a stray space was the issue. Edit the folder paths according to your setup.

msiexec /i "c:\setup\hdp-1.1.0-GA.winpkg.msi" /lv "hdp.log" HDP_LAYOUT="C:\setup\clusterProperties.txt" HDP_DIR="C:\hdp\Hadoop" DESTROY_DATA="no"

6. If everything goes well, you should see a success message.

7. Log on to each of the other VMs and repeat steps #2 to 6.

Congratulations, you have now successfully created your first Hadoop cluster. You’ll see new shortcuts on the desktop of each VM. Before starting to explore Hadoop, we need to start the services on both the local and the remote machines. Log on to the HDMaster server, go to the Hadoop installation directory and look for the \hadoop folder. You’ll see a couple of batch files to start and stop the services.
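
Because a stray space around ‘=’ is so easy to introduce when retyping the command on every VM, one option is to build the command string from its parts. This is only a sketch: `msiexec_command` is a helper name I made up, and it reproduces exactly the command from step 5 with the same properties and no others.

```python
def msiexec_command(msi_path, layout_path, hdp_dir,
                    log_file="hdp.log", destroy_data="no"):
    """Build the install command with no spaces around '=' in any property."""
    properties = [
        ("HDP_LAYOUT", layout_path),
        ("HDP_DIR", hdp_dir),
        ("DESTROY_DATA", destroy_data),
    ]
    parts = ["msiexec", "/i", '"%s"' % msi_path, "/lv", '"%s"' % log_file]
    # Each property is emitted as NAME="value", joined without extra spaces.
    parts += ['%s="%s"' % (name, value) for name, value in properties]
    return " ".join(parts)


print(msiexec_command(
    r"c:\setup\hdp-1.1.0-GA.winpkg.msi",
    r"C:\setup\clusterProperties.txt",
    r"C:\hdp\Hadoop",
))
```

Paste the printed line into the elevated command prompt from step 4, adjusting the three paths first if your layout differs.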

In the next blog post, we will write a sample MapReduce job using Pig and one using Python. Have fun!